Notes from Poland is run by a small editorial team and is published by an independent, non-profit foundation that is funded through donations from our readers. We cannot do what we do without your support.

An international study has found Polish to be the best language for carrying out complex AI tasks. The authors admit that the result was “surprising”, as was the fact that English ranked only sixth among the 26 languages tested.

The research, conducted by AI experts from Microsoft, the University of Maryland, College Park, and the University of Massachusetts Amherst, presented a new benchmark for assessing the performance of large language models (LLMs) across different languages.

We are the best 😎 at AI 🇵🇱 https://t.co/HpzEBtSBMn @rzeczpospolita pic.twitter.com/M8v5pHaL4S

— Res Futura Data House (@Polityka_wSieci) October 25, 2025

LLMs are AI programs trained on vast amounts of data, enabling them to perform tasks such as text recognition, generation and translation. They are what power the most publicly visible form of AI, chatbots such as ChatGPT, Gemini and Grok.

The scholars tested how six LLMs – from the OpenAI, Gemini, Qwen and Llama model families – performed when responding to the same inputs in 26 different languages. They found what they described as “several surprising and counterintuitive results”.

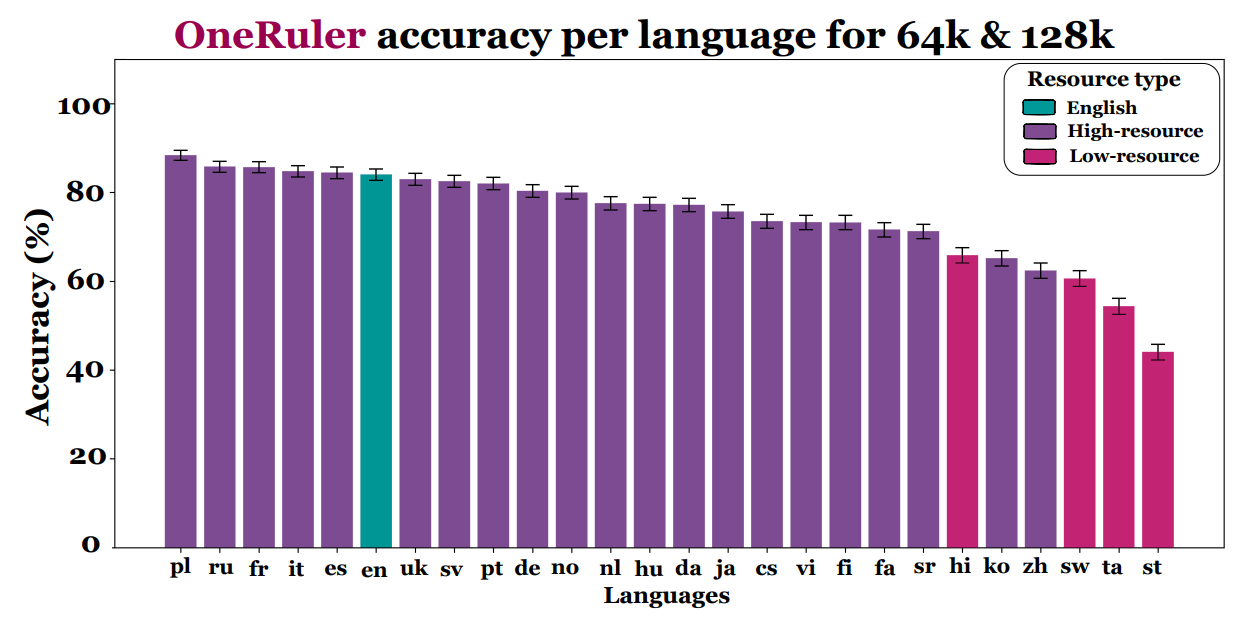

One was that, in the most complex tasks, which involve processing large amounts of text, Polish finished top, with an average accuracy of 88%. English was only sixth, with 83.9%, while Chinese – which, like English, is often used to pretrain LLMs – was the fourth-worst performer, with 62.1%.
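To put those figures side by side, the short Python sketch below works out how far each of the three languages quoted above trails the top score. It uses only the percentages reported in this article; the function name `gap_to_top` is purely illustrative and is not taken from the study, whose full per-language results are not reproduced here.

```python
# Average accuracy (%) in the most complex tasks, as quoted in the article.
# Only these three figures are given in the text; the rest are omitted.
reported_accuracy = {
    "Polish": 88.0,
    "English": 83.9,
    "Chinese": 62.1,
}


def gap_to_top(scores):
    """Return each language's shortfall from the best score, in percentage points."""
    best = max(scores.values())
    return {lang: round(best - acc, 1) for lang, acc in scores.items()}


gaps = gap_to_top(reported_accuracy)

# List the languages from smallest to largest gap.
for lang, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{lang}: {reported_accuracy[lang]}% ({gap} points behind the top score)")
```

On these numbers, English trails Polish by 4.1 percentage points and Chinese by 25.9.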

“English and Chinese dominate the pretraining data…and so we might expect them to be the top-performing languages,” wrote the researchers. “However, at context lengths of 64K and 128K [i.e. the most complex tasks], we unexpectedly observe that Polish is the top performer.”

The second-best performer was Russian, followed by French, Italian and Spanish. The worst performer was Sesotho, just below Tamil and Swahili.

The researchers found that the top-performing language families were Slavic (to which Polish and Russian belong), Romance (which includes Spanish and French), and Germanic (such as English). By contrast, Bantu languages (such as Swahili and Sesotho) fared badly despite having over 350 million speakers.

The performance of each language in the most complex tasks (source: “One ruler to measure them all: Benchmarking multilingual long-context language models”, Yekyung Kim, Jenna Russell, Marzena Karpinska, Mohit Iyyer)

The authors believe that some of the factors influencing the relative performances of languages are the type of script they use (with Latin or Cyrillic script languages performing better) and whether they are “high or low resource languages”, meaning how much data is available to train AI in their use.

Earlier this year, Poland’s government launched the Polish Large Language Model (PLLuM), which is freely available for all to use and is intended to support the development of artificial intelligence.

One of the aims of the project is to support the provision of public services in Poland. In August, the city of Częstochowa became the first to begin using PLLuM to enable faster writing of official letters, analysis of inquiries from residents, and summarising of long documents, among other tasks.

Poland’s government has launched a Polish Large Language Model (PLLuM) that is freely available and intended to support the development of AI.

In particular, it will be used to create virtual assistants intended to help provide public services https://t.co/z0gdWkM4OH

— Notes from Poland 🇵🇱 (@notesfrompoland) February 24, 2025

Main image credit: Jernej Furman/Wikimedia Commons (under CC BY 2.0)

Daniel Tilles is editor-in-chief of Notes from Poland. He has written on Polish affairs for a wide range of publications, including Foreign Policy, POLITICO Europe, EUobserver and Dziennik Gazeta Prawna.